OpenAI Operator and the Future of Research Publishing Automation

OpenAI recently introduced Operator, a new class of AI agent that operates a web browser on the user’s behalf. Unlike traditional AI models that generate text or answer queries in isolation, Operator can act within the web environment: navigating pages, entering data, and completing tasks across different web applications.

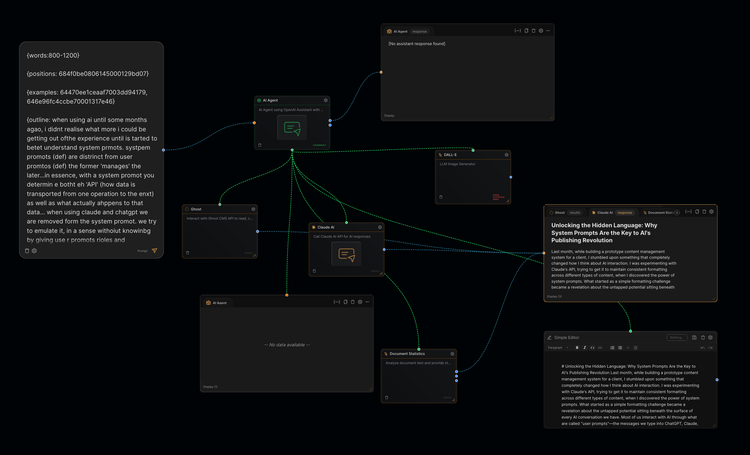

This represents a significant shift. Instead of relying solely on APIs or manual user interaction, Operator can function as an intermediary that automates complex workflows across different platforms. Think of it as an intelligent assistant that can move data between applications, extract key information, validate content, and even improve existing records, all without requiring deep system integrations.

Experiment: Migrating PLOS Metadata into Kotahi

To test Operator’s capabilities, I ran a simple experiment: extracting metadata from a PLOS ONE article and transferring it into Kotahi as a new submission. The process was slow, but it worked. The experiment highlights an important point: this is the worst Operator will ever be. From here, it will only improve in speed and accuracy.

Now consider the implications. If an AI agent like Operator can autonomously extract metadata, format it, and transfer it into a submission system, we are looking at the early stages of a fully automated migration tool. The potential is huge: instead of needing API access to a publisher’s corpus, an Operator-like agent could ‘scrape’ content directly from the web and enter it into any system that has a web interface.
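For readers who want to see what the ‘extract and format’ step amounts to, here is a minimal Python sketch of it. It is not how Operator works internally (Operator interacts with the rendered page rather than running a parser like this); it only illustrates the mapping the agent performs. It assumes the article page exposes Highwire-style `citation_*` meta tags (such as `citation_title`, `citation_author`, and `citation_doi`), which many journal websites publish for scholarly indexers. The output field names are hypothetical placeholders, not Kotahi’s actual submission schema, and the sample page is invented.

```python
from html.parser import HTMLParser


class CitationMetaParser(HTMLParser):
    """Collect Highwire-style <meta name="citation_*"> tags from an HTML page."""

    def __init__(self):
        super().__init__()
        self.meta = {}  # tag name -> list of values (author tags repeat)

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = attr.get("name") or ""
        content = attr.get("content")
        if name.startswith("citation_") and content:
            self.meta.setdefault(name, []).append(content.strip())


def to_submission(html):
    """Map extracted meta tags onto a hypothetical submission record."""
    parser = CitationMetaParser()
    parser.feed(html)
    meta = parser.meta

    def first(key):
        values = meta.get(key)
        return values[0] if values else None

    return {
        "title": first("citation_title"),
        "authors": meta.get("citation_author", []),
        "doi": first("citation_doi"),
        "journal": first("citation_journal_title"),
    }


if __name__ == "__main__":
    sample_page = """
    <html><head>
      <meta name="citation_title" content="An Example Article">
      <meta name="citation_author" content="Jane Doe">
      <meta name="citation_author" content="John Roe">
      <meta name="citation_doi" content="10.5555/example.0001" />
      <meta name="citation_journal_title" content="Example Journal">
    </head><body></body></html>
    """
    print(to_submission(sample_page))
```

A parser like this only sees what a site chooses to publish in standard tags; anything that exists only in the visible page is exactly the gap an agent like Operator could fill.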

Beyond Migration: Automating Scholarly Data Processing

But let’s push this idea further. Instead of just moving data from one place to another, what if we also built a suite of lightweight web applications to perform specific validation and enrichment tasks along the way? Imagine an automated pipeline that:

- Extracts metadata from content published online.

- Runs it against validation engine X to check for errors or missing fields.

- Sends different data types to different validation tools—metadata goes one way, references another.

- Collates the results, refines the record, and improves existing entries.

- Routes the record to a human, who acts as the final quality-control checkpoint.

- Updates sundry other systems as required.

This modular approach would allow for incremental improvements in data quality while minimizing human workload. Instead of designing one massive system, we could deploy multiple small, specialized applications that interact seamlessly with Operator, each handling a specific part of the data processing workflow.
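Here is a minimal Python sketch of that modular idea, assuming each small application can be modelled as a function that checks one kind of data and returns a list of problems. The two validators, the routing table, and the record layout are hypothetical stand-ins for ‘validation engine X’ and its siblings; in a real deployment each would be a separate web service that the agent visits. The DOI check is a simplified shape test, not full validation.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Simplified DOI shape check: "10.", a registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


def validate_metadata(meta: dict) -> List[str]:
    """Hypothetical metadata validator: required fields and DOI shape."""
    problems = []
    for required in ("title", "authors", "doi"):
        if not meta.get(required):
            problems.append(f"missing field: {required}")
    doi = meta.get("doi")
    if doi and not DOI_PATTERN.match(doi):
        problems.append(f"malformed DOI: {doi}")
    return problems


def validate_references(refs: list) -> List[str]:
    """Hypothetical reference validator: each entry needs a DOI or a title."""
    return [
        f"reference {i} has neither DOI nor title"
        for i, ref in enumerate(refs, start=1)
        if not ref.get("doi") and not ref.get("title")
    ]


# Routing table: record part -> (validator, factory for an empty default).
ROUTES: Dict[str, Tuple[Callable, Callable]] = {
    "metadata": (validate_metadata, dict),
    "references": (validate_references, list),
}


@dataclass
class Report:
    """Collated results that the human reviewer sees at the final checkpoint."""
    issues: Dict[str, List[str]] = field(default_factory=dict)

    @property
    def has_issues(self) -> bool:
        return any(self.issues.values())


def run_pipeline(record: dict) -> Report:
    """Send each part of the record to its validator and collate the results."""
    report = Report()
    for part, (validator, empty) in ROUTES.items():
        report.issues[part] = validator(record.get(part) or empty())
    return report


if __name__ == "__main__":
    record = {
        "metadata": {"title": "An Example Article", "authors": ["Jane Doe"], "doi": "not-a-doi"},
        "references": [{"title": "A cited work"}, {}],
    }
    report = run_pipeline(record)
    for part, problems in report.issues.items():
        for problem in problems:
            print(f"[{part}] {problem}")
    print("issues found:", report.has_issues)
```

Adding a new check means writing one more small validator and one more routing entry; nothing else in the pipeline has to change, which is the point of keeping the pieces small.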

It might be tempting to dismiss Operator as just another scraper bot, but it’s qualitatively different. Here’s why:

- Dynamic Interaction vs. Static Scraping:

Traditional scraper bots are typically hard-coded to extract data from specific web pages, and they often need updating whenever a website changes its layout or structure. Operator, on the other hand, uses AI to interact with web pages dynamically: navigating complex sites, handling dynamic content, and simulating human-like interactions. This makes it more resilient and adaptable across a variety of web environments.

- End-to-End Workflow Automation:

Unlike a basic scraper that simply pulls data from a site, Operator can fold the scraping step into a broader automated workflow. It doesn’t just extract data; it can validate and format that data and enter it directly into other applications or systems. This means you can automate an entire pipeline, from data collection through processing to insertion into your target platform, with little manual intervention.

- Ease of Integration:

With Operator, there’s no need to wait for a publisher to provide a dedicated API, or to build and maintain custom scraping infrastructure. Because it works through the same web interfaces a person would use, it can connect to essentially any service reachable in a browser, which makes it easier to retrieve and integrate unstructured data. This reduces development overhead and shortens the time needed to deploy complex data workflows.

- Intelligent Adaptability:

Operator’s AI-driven nature means it can adjust its approach to the specific challenges it encounters on different websites, rather than following a fixed script. Traditional scraper bots, by contrast, often require manual reconfiguration to cope with new or changing environments.

- Logged-In System Access & Data Migration:

A particularly important capability of Operator is that it can work inside systems you’re logged into. This is invaluable when migrating or manipulating data, especially if your current provider lacks robust export features or API tools. Once you have logged in to the platform, Operator acts as an agent with your authorized access, using the platform’s own interfaces to extract, manipulate, and transfer data.

In summary, while web scraping itself is not new, Operator stands out by combining intelligent scraping with comprehensive automation. It transforms the process into an end-to-end solution that not only gathers data but also processes and integrates it—making it a significantly more powerful and efficient tool for connecting diverse services and handling unstructured data across platforms.

Other simple but effective use cases include:

- Monitoring and archiving open-access publications: Operator could systematically check various open-access sources for new content, ensuring important research is archived and indexed correctly.

- Enhancing metadata quality for research outputs: By continuously scraping unstructured metadata from multiple sources—conference proceedings, preprint servers, university repositories—Operator could validate and standardize these records, improving their usability and discoverability.

- Automating rights and license checks: By crawling journal websites and repositories, Operator could verify copyright and licensing notices, ensuring compliance with open-access policies.

- Tracking research impact and altmetrics: Operator could scan web mentions, social media, and citation networks to provide deeper insights into how research is being discussed and cited outside traditional academic metrics.

- Cross-referencing with external sources: The tool could scrape metadata from multiple trusted sources (e.g., Crossref, ORCID, institutional repositories) to fill in missing data, correct author names, and enhance citations.

- Detecting and merging duplicate records across systems: Research outputs are often stored across multiple repositories, institutional archives, and discipline-specific databases without clear connections between the copies. Operator could identify duplicate records by comparing metadata fields such as author names, titles, and DOIs, and suggest how to reconcile inconsistencies between them.
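The duplicate-detection idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how any existing repository or service does it: two records count as the same work if their normalized DOIs match, or, when a DOI is missing, if their normalized titles are nearly identical (scored with the standard library’s `difflib.SequenceMatcher`) and they share at least one author surname. The 0.9 threshold, the record fields, and the "Given Family" author format are all assumptions. Merging then fills gaps in one record from the other, which is also the basic move behind cross-referencing with external sources.

```python
import re
from difflib import SequenceMatcher


def normalize_doi(doi):
    """Lower-case a DOI and strip common resolver-URL and 'doi:' prefixes."""
    if not doi:
        return None
    doi = re.sub(r"^(https?://(dx\.)?doi\.org/|doi:)", "", doi.strip().lower())
    return doi.strip() or None


def normalize_title(title):
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    title = re.sub(r"[^\w\s]", " ", (title or "").lower())
    return " ".join(title.split())


def surnames(record):
    # Assumes authors are stored as "Given Family" strings.
    authors = record.get("authors") or []
    return {name.split()[-1].lower() for name in authors if name.split()}


def is_duplicate(a, b, threshold=0.9):
    """Same DOI, or (without DOIs) near-identical titles plus a shared surname."""
    doi_a, doi_b = normalize_doi(a.get("doi")), normalize_doi(b.get("doi"))
    if doi_a and doi_b:
        return doi_a == doi_b
    title_a, title_b = normalize_title(a.get("title")), normalize_title(b.get("title"))
    if not title_a or not title_b:
        return False  # not enough information to decide
    similarity = SequenceMatcher(None, title_a, title_b).ratio()
    return similarity >= threshold and bool(surnames(a) & surnames(b))


def merge(primary, secondary):
    """Keep the primary record's values; fill only fields it lacks."""
    merged = dict(primary)
    for key, value in secondary.items():
        if not merged.get(key) and value:
            merged[key] = value
    return merged


if __name__ == "__main__":
    repository_copy = {"title": "An Example Article", "authors": ["Jane Doe"], "doi": None}
    archive_copy = {"title": "An example article.", "authors": ["J. Doe"],
                    "doi": "https://doi.org/10.5555/example.0001"}
    if is_duplicate(repository_copy, archive_copy):
        print(merge(repository_copy, archive_copy))
```

In keeping with the human-checkpoint idea above, a real workflow would present likely duplicates and the proposed merge for approval rather than applying them automatically.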

Implications for Open Scholarly Infrastructure

The potential disruption for scholarly infrastructure as it stands today is enormous. Consider:

- Automated journal migrations: Moving from one publishing platform to another without needing direct API access.

- Metadata enrichment: Cross-referencing and validating data across multiple sources.

- Decentralized publishing workflows: Enabling researchers to manage submissions, peer reviews, and publishing tasks without being tied to a single, monolithic platform.

Rather than treating AI as a bolt-on feature to existing platforms, we should consider how AI-driven agents and microservices can fundamentally reshape how we interact with research data. Instead of monolithic systems which centralize every function into a rigid framework, we could move toward a distributed approach where automation handles data migration, validation, and workflow execution at the request of the user. This would shift the role of the user from manual operator to high-level director—guiding processes, checking results, and intervening when necessary.

By breaking down publishing infrastructure into modular, interoperable services, we can create a system that is more flexible, scalable, and responsive to the needs of researchers and publishers alike. This is not just an optimization of current workflows but a rethinking of what research publishing infrastructure can be.
