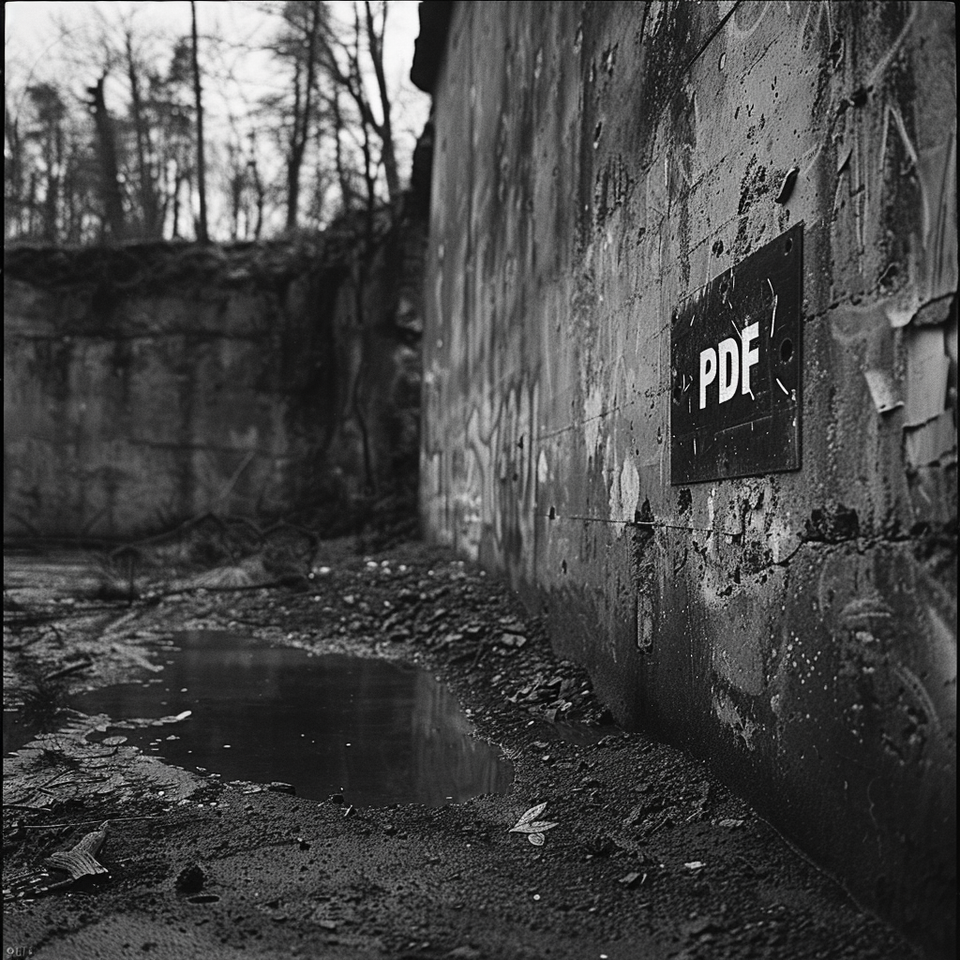

The Urgent Need for an Open Source PDF to HTML/XML Converter in the Preprint Ecosystem

The world of scholarly communication is rapidly evolving, with preprints and preprint servers gaining significant traction. As we enter the second wave of this evolution, preprint review communities are emerging, raising important questions about how to position a document with its review and what to do about metadata.

The key problem lies in the file formats used by preprint servers, which rely heavily on PDF, making it extremely challenging to reconstitute structured content or extract metadata. PDF is a terrible format to work with, and reconstructing structured information from a PDF is, as Peter Murray-Rust has famously said, like "constructing a hamburger into a cow". The scholarly community has known about the problems of PDF for a long time, as attested by the beautifully named 'Beyond the PDF' symposium in 2011 (later to become Force11), which sought to explore richer and more dynamic ways to present scholarly content than PDF.

So why is it that, from where I sit, one of the most important evolutions of scholarly communications in the last decade (preprint servers and the preprint review/curate ecosystem) is dedicated to PDF? It feels like one of the most important new futures of scholarly communications is taking a giant leap backwards when it comes to file formats. Let it be said: file formats are important, and bad file formats already cost the sector millions annually. We are now facing an enormous regression, and I'm not sure the preprint community at large is cognizant of how much of what it wants to achieve is going to be costly and slow if we don't do something to remedy the problems PDF is increasingly going to cause.

Ideally, preprint servers would change the submission format, but I fear we are too late to achieve that across the entire ecosystem. At least, I don't see that change coming quickly (although this idea also needs advocacy). So what are we to do?

The Importance of Structured Content

Structured content is crucial for the future of the preprint-review model. As the ecosystem matures, higher production values and more sophisticated data requirements are being placed on it. As preprint servers and review communities continue to gain brand value and position their content as authoritative, the need for structured content will become increasingly pressing. However, the preprint ecosystem's reliance on PDF files puts it at a significant disadvantage compared to traditional journal publishing models. Journals often start with document file formats that have some form of almost-rational underlying markup (e.g., DOCX or LaTeX); preprint servers, by relying on PDF, start from a worse position.

Coko has demonstrated that it is possible to mostly automate the process of converting document file formats such as DOCX into highly structured content (JATS XML). Unfortunately, this is not yet the case for PDF files, which are notoriously difficult to deconstruct into structured document formats like HTML and XML.
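To make the value of structured content concrete, here is a minimal sketch of how little code it takes to pull a title and author list out of JATS-style XML. The fragment below is a hypothetical, heavily simplified example invented for illustration (real JATS files carry far more detail), and the `extract_metadata` helper is my own naming, not part of any existing tool. Nothing comparably simple exists for a PDF, where the same information has to be guessed from fonts and page positions.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified JATS-style fragment (invented for illustration).
JATS_SAMPLE = """
<article>
  <front>
    <article-meta>
      <title-group>
        <article-title>An Example Preprint</article-title>
      </title-group>
      <contrib-group>
        <contrib contrib-type="author">
          <name><surname>Doe</surname><given-names>Jane</given-names></name>
        </contrib>
        <contrib contrib-type="author">
          <name><surname>Roe</surname><given-names>Richard</given-names></name>
        </contrib>
      </contrib-group>
    </article-meta>
  </front>
</article>
"""


def extract_metadata(jats_xml: str) -> dict:
    """Return the article title and author names from a JATS-style document."""
    root = ET.fromstring(jats_xml)
    title = root.findtext(".//article-title", default="")

    authors = []
    for contrib in root.iter("contrib"):
        # Keep only contributors explicitly marked as authors.
        if contrib.get("contrib-type") != "author":
            continue
        given = contrib.findtext("name/given-names", default="")
        surname = contrib.findtext("name/surname", default="")
        authors.append(f"{given} {surname}".strip())

    return {"title": title, "authors": authors}


metadata = extract_metadata(JATS_SAMPLE)
print(metadata)
```

Because the markup says explicitly which text is the title and which names belong to authors, the extraction is a lookup rather than an inference. That is the property a PDF converter would have to reconstruct.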

The Call for an Open Source Solution

To address this urgent need, we are calling for funding to develop an open source application that can deconstruct arbitrary PDF files into structured HTML and XML. This is not a trivial task and would require a substantial investment over an initial 2-year development period. The tool will, however, require ongoing maintenance until the day no PDFs are submitted anywhere, as authors will continue to find new ways of using PDF to represent content, and each will require further refinement of the conversion process.

We are reaching out to the readers of this blog, who are well-connected and understand the importance of this project, to help us identify potential funding sources.

Many funders may not understand the significance of this project, may believe it has already been solved (it hasn't), or may think "PDF conversion" isn't a very sexy topic and focus instead on newer, more glamorous technologies (which is not to say AI couldn't be part of solving this issue). However, the development of an open source PDF to HTML/XML converter is a critical component in the evolution of the preprint ecosystem and must be addressed now. For this, we need your help.

The Benefits of an Open Source PDF Converter

A high-fidelity open source PDF to HTML/XML converter, capable of accurately converting arbitrarily formatted PDF files into structured content, would provide numerous benefits to the scholarly communication community:

- Widespread adoption: An open source tool can be adopted by any existing platform.

- Enhanced accessibility: It would provide capabilities to improve the accessibility of preprint content.

- Efficient data extraction: We can better extract data from structured content.

- Flexible display and formatting: We can display and re-format content for various devices more easily.

- Content enhancement and analysis: Structured content can be enhanced and analyzed more effectively.

- Improved interoperability: We will achieve better interoperability between different preprint servers and platforms.

- Reduced reliance on proprietary solutions: We can reduce reliance on proprietary file formats and tools.

- Enriched data ecosystem: It will contribute to an improved data ecosystem in the preprint universe.

By investing in the development of this core open source component, we can ensure that the preprint ecosystem continues to thrive and evolve, ultimately benefiting researchers, institutions, and the public at large.

A Call to Action

I urge the readers of this blog, as well as the wider scholarly communication community, to recognize the importance of this project and help us identify potential funding sources. Together, we can work towards building a more open, accessible, and interoperable future for scholarly communication.
